Opinion: Social Media Platforms Need Disclaimers On Graphic Content

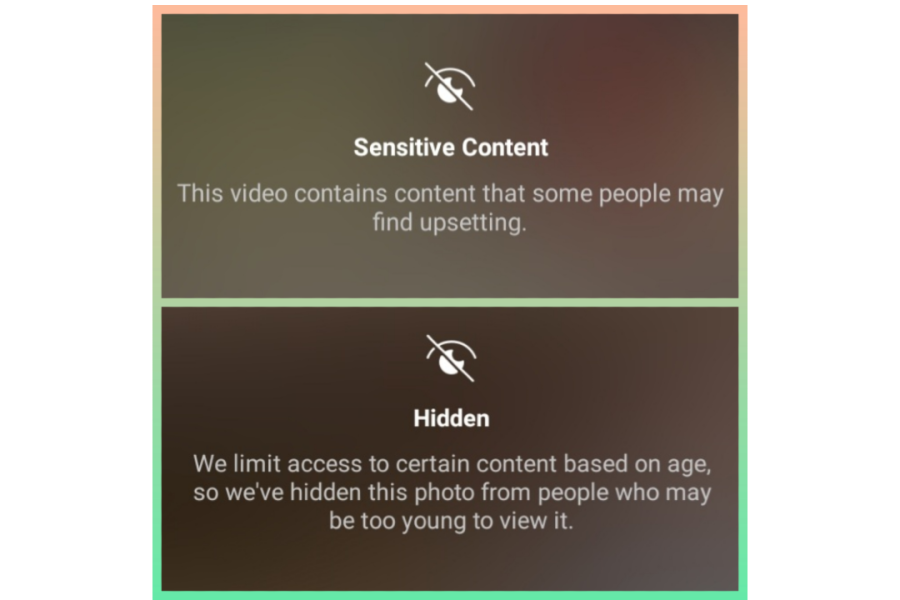

Social media filters like those of Instagram, pictured above, help provide warnings for viewers.

On March 24, fourteen-year-old Tyre Sampson of Missouri fell from Free Fall, a drop tower attraction at ICON Park in Orlando, Florida. Footage of the incident went viral on social media, and I unfortunately came across it while scrolling through Twitter.

I was one of the millions of people who saw this video, and I was left without words. I believe this type of content shouldn’t be shared online, especially on social media. The Orange County Sheriff’s Office has tried to have the video removed but hasn’t succeeded.

“While users try their best to protect themselves from seeing graphic images, social media companies are under no legal responsibility to remove them,” said UF Professor of Social Media Andrew Selepak. “There’s no rule, no law that requires social media companies to censor anything or determine what is even too sensitive.”

Because social media platforms are not publishers, they are not responsible for the content their users post. Most platforms employ moderators to find content that violates their policies, but moderators cannot keep up with the volume of posts shared daily.

Twitter, for example, has a “graphic violence” policy, but when you try to report the ICON Park video, the list of reporting options does not include “graphic violence.”

I would prefer that social media companies keep feeds free of disturbing videos and images. But platforms controlling what does and doesn’t get posted would lead to protests, because users would not have the freedom to post what they want. And that is what the First Amendment is all about: we all have the freedom to say and post what we want on social media, as long as it does not break a platform’s community guidelines.

One solution would be to place a disclaimer stating, “This video contains content that people may find upsetting,” on any content that meets a platform’s criteria for graphic material.

A disclaimer prevents the video from auto-playing and gives viewers the choice to continue watching or to back out before the video starts.

This could spare millions of people from being disturbed by content they don’t want to see.

Robert Maldonado Rodriguez is our Social Media Editor from Puerto Rico. Robert is pursuing his A.S. in New Media Communications. He joined Valencia Voice...